Agentic RAG using DeepSeek R1, Qdrant, and LangChain

Over the past decade, artificial intelligence has grown rapidly, with Large Language Models (LLMs) changing the way we interact with machines. While these models are powerful, they depend on pre-existing data and struggle with real-time updates or complex reasoning. Retrieval-Augmented Generation (RAG) was developed to solve this by integrating external knowledge sources. However, standard RAG systems often fall short when dealing with multi-step reasoning or adapting to changing queries.

Agentic RAG takes this concept further by adding autonomous AI agents to the retrieval process. These agents can adjust queries, interact with multiple knowledge sources, and validate the relevance of retrieved data. Unlike traditional RAG, Agentic RAG continuously refines information, leading to more accurate and reliable responses.

In this blog, we will explore what Agentic RAG is, why it is better than traditional RAG, and how to implement it using DeepSeek, Qdrant, and LangChain.

Agentic RAG Paper https://arxiv.org/pdf/2501.09136

What is Agentic RAG

Agentic RAG is an advanced form of Retrieval-Augmented Generation (RAG) that integrates AI agents to dynamically refine and optimize the retrieval and response generation process. Unlike standard RAG, which follows a fixed pipeline of query, retrieve, and generate, Agentic RAG adds cognitive abilities to the pipeline. These abilities include planning, self-correction, and adaptive retrieval based on the complexity of user queries. Instead of simply fetching documents, Agentic RAG actively makes decisions about what information to retrieve and how to process it.
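To make the contrast concrete, here is a minimal sketch of the two control flows in plain Python. Everything in it is a hypothetical stand-in invented for illustration: `CORPUS`, `EXPANSIONS`, `retrieve`, and `reformulate` are toy helpers based on keyword matching, not part of LangChain or any other library. A real agent would use an LLM to decide when and how to rewrite the query.

```python
from typing import List, Tuple

# Toy knowledge base and abbreviation table (hypothetical data).
CORPUS = [
    "Qdrant is a vector database for similarity search.",
    "LangChain provides building blocks for LLM applications.",
    "Retrieval-Augmented Generation combines retrieval with generation.",
]
EXPANSIONS = {"rag": "retrieval-augmented generation"}


def retrieve(query: str) -> List[str]:
    """Return documents that share at least one lowercase word with the query."""
    words = set(query.lower().split())
    return [doc for doc in CORPUS if words & set(doc.lower().rstrip(".").split())]


def reformulate(query: str) -> str:
    """Rewrite the query by expanding known abbreviations."""
    return " ".join(EXPANSIONS.get(word, word) for word in query.lower().split())


def fixed_pipeline(query: str) -> List[str]:
    """Standard RAG: retrieve once and accept whatever comes back."""
    return retrieve(query)


def agentic_pipeline(query: str, max_steps: int = 3) -> Tuple[List[str], List[str]]:
    """Agentic loop: retrieve, check the result, and rewrite the query if needed."""
    tried: List[str] = []
    current = query
    for _ in range(max_steps):
        tried.append(current)
        docs = retrieve(current)
        if docs:  # validation step: something relevant was found
            return docs, tried
        rewritten = reformulate(current)
        if rewritten == current:  # nothing left to try
            break
        current = rewritten
    return [], tried


fixed_docs = fixed_pipeline("RAG")
agentic_docs, attempts = agentic_pipeline("RAG")
```

In this toy setup the fixed pipeline finds nothing for the abbreviation, while the agentic loop retries with the expanded query and records each attempt in `attempts`.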

Core Components

Agentic RAG consists of several critical components that enable its enhanced retrieval and reasoning capabilities. These components work together to ensure efficient knowledge retrieval, validation, and response generation. Below are the key components explained in detail:

- Autonomous Decision-Making: Unlike traditional RAG, Agentic RAG empowers AI agents to make intelligent decisions regarding the retrieval process. These agents assess the complexity of a query, decide which retrieval method to use, and determine whether additional refinement is needed before generating a response.

- Multi-Source Information Fusion: Traditional RAG systems typically retrieve data from a single knowledge base. In contrast, Agentic RAG integrates multiple data sources such as vector databases, APIs, real-time web searches, and proprietary knowledge bases. This multi-source approach ensures richer, more contextually accurate responses.

- Iterative Query Refinement: The retrieval process in Agentic RAG is not a one-time operation. AI agents can reformulate and refine queries based on intermediate results. If the initially retrieved documents do not fully answer the query, the agent adjusts its search parameters and retrieves additional information.

- Validation and Self-Correction: Agentic RAG employs multiple levels of validation before finalizing a response. AI agents cross-verify retrieved documents, discard irrelevant or contradictory data, and refine the final output to ensure reliability. This helps reduce hallucinations and misinformation, a common problem in traditional LLM-based systems.

- Adaptive Retrieval Strategies: Instead of relying on a fixed pipeline, Agentic RAG adapts retrieval strategies based on the nature of the query. For instance, if a query requires factual data, the system may prioritize vector search in structured databases, whereas for evolving knowledge, it may utilize real-time web search.

- Memory and Context Awareness: Agentic RAG systems maintain both short-term and long-term memory to preserve context across multi-turn interactions. This allows for a more dynamic and interactive user experience, where the AI agent builds upon previous responses rather than treating each query independently.

- Tool-Enabled Reasoning: AI agents in Agentic RAG can leverage external tools such as calculators, code execution environments, and structured data query engines to enhance their responses. This tool-use capability allows them to go beyond basic retrieval and engage in problem-solving tasks.
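Two of the components above, multi-source fusion and validation, can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: `overlap_score` and `fuse_and_validate` are hypothetical helpers, relevance is approximated by keyword overlap, and the passages are made-up strings. A production system would more likely score relevance with an LLM or a reranker.

```python
from typing import Dict, List


def overlap_score(query: str, passage: str) -> float:
    """Fraction of query words that also appear in the passage."""
    query_words = set(query.lower().split())
    passage_words = set(passage.lower().split())
    return len(query_words & passage_words) / len(query_words) if query_words else 0.0


def fuse_and_validate(
    query: str,
    results_by_source: Dict[str, List[str]],
    threshold: float = 0.5,
) -> List[dict]:
    """Merge passages from several sources, drop duplicates and weak matches."""
    seen = set()
    kept = []
    for source, passages in results_by_source.items():
        for passage in passages:
            key = passage.strip().lower()
            if key in seen:  # the same passage returned by another source
                continue
            seen.add(key)
            score = overlap_score(query, passage)
            if score >= threshold:  # validation: keep only relevant passages
                kept.append({"source": source, "text": passage, "score": score})
    # Highest-scoring passages first; ties keep their original order.
    kept.sort(key=lambda item: item["score"], reverse=True)
    return kept


# Hypothetical results from two sources for the same query.
results = {
    "vector_db": [
        "qdrant supports vector search over embeddings",
        "the office cafeteria menu changed",
    ],
    "web": [
        "Qdrant supports vector search over embeddings",
        "vector search tutorial",
    ],
}
validated = fuse_and_validate("qdrant vector search", results)
```

Here the duplicate web passage and the off-topic passage are discarded, and the remaining passages are ordered by score before being handed to the generator.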

Why is Agentic RAG Better than Traditional RAG?

Traditional RAG has been a major step forward in AI-driven knowledge retrieval, allowing models to generate more contextualized responses. However, it has several limitations, such as static workflows, a single retrieval step, and a lack of intelligent reasoning. These constraints make it difficult to handle complex queries or adapt dynamically.

Agentic RAG overcomes these challenges by introducing AI agents that intelligently navigate and interact with multiple knowledge sources. Unlike traditional RAG, agentic systems can refine searches iteratively, validate retrieved data, and ensure higher accuracy in responses.

Here’s how Agentic RAG improves upon traditional RAG:

- More Accurate Responses – Validates sources before generating an answer.

- Handles Complex Queries – Supports multi-step reasoning and dynamic information retrieval.

- Adaptive & Flexible – Continuously adjusts search strategies based on query intent.

- Automates Workflows – AI agents manage retrieval, validation, and filtering automatically.

Traditional RAG vs. Agentic RAG

| Feature | Traditional RAG | Agentic RAG |
|---|---|---|
| Retrieval Strategy | Static | Adaptive & Dynamic |
| Query Processing | One-Shot | Multi-Step & Iterative |
| Data Sources | Limited | Web, APIs, Vector DBs |
| Validation | No | AI-Driven Filtering |
| Automation | No | Yes, with AI Agents |

With Agentic RAG, AI-driven retrieval becomes more reliable, real-time, and precise, making it ideal for enterprise-level AI applications that require advanced reasoning and automation.

Implementation of Agentic RAG

For the complete code check out this Notebook. Also, if you are interested in learning advanced RAG techniques, check out the GitHub repository we created.

Before diving into the implementation, ensure you have the necessary libraries installed and environment variables configured.

```python
# install dependencies (qdrant-client is required by the Qdrant vector store)
!pip install --upgrade --quiet athina-client langchain langchain_community pypdf langchain-deepseek-official langchain-huggingface qdrant-client

# set api keys (read from the Colab secrets store)
import os
from google.colab import userdata

os.environ['ATHINA_API_KEY'] = userdata.get('ATHINA_API_KEY')
os.environ['TAVILY_API_KEY'] = userdata.get('TAVILY_API_KEY')
os.environ['DEEPSEEK_API_KEY'] = userdata.get('DEEPSEEK_API_KEY')
os.environ['QDRANT_API_KEY'] = userdata.get('QDRANT_API_KEY')
```

Next, load your documents (e.g., PDFs) and prepare them for retrieval. Split the document into chunks; this example uses 500-character chunks with no overlap, and you can raise `chunk_overlap` if context is lost at chunk boundaries.

```python
# load pdf
from langchain_community.document_loaders import PyPDFLoader

loader = PyPDFLoader("/content/tesla_q3.pdf")
documents = loader.load()

# split documents
from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
documents = text_splitter.split_documents(documents)
```
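To see what `chunk_size` and `chunk_overlap` control, here is a simplified sketch using a fixed-width character window. It is not how `RecursiveCharacterTextSplitter` works internally (that splitter prefers to break on separators such as paragraphs and sentences); `chunk_text` is a hypothetical helper that only illustrates the effect of the two parameters.

```python
from typing import List


def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into fixed-width windows that share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window reached the end
            break
        start += step
    return chunks


sample = "abcdefghij"
no_overlap = chunk_text(sample, chunk_size=4, chunk_overlap=0)
with_overlap = chunk_text(sample, chunk_size=4, chunk_overlap=2)
```

With no overlap each character appears in exactly one chunk; with an overlap of 2, neighbouring chunks share their boundary characters, which can help when an answer straddles two chunks at the cost of storing more vectors.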

```python
# load embedding model
from langchain_huggingface import HuggingFaceEmbeddings

embeddings = HuggingFaceEmbeddings(
    model_name="BAAI/bge-small-en-v1.5",
    encode_kwargs={"normalize_embeddings": True},
)
```
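The `normalize_embeddings` option scales every vector to unit length. A short sketch shows why that is convenient: for unit vectors, the dot product equals the cosine similarity. The two-dimensional vectors below are toy values chosen for easy arithmetic, not real model outputs, and the helper names are hypothetical.

```python
import math
from typing import List


def l2_normalize(vector: List[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity computed from the raw, unnormalized vectors."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


a = [3.0, 4.0]
b = [4.0, 3.0]

cosine_raw = cosine_similarity(a, b)
dot_normalized = dot(l2_normalize(a), l2_normalize(b))
```

Both values agree up to floating-point error, so a vector store can rank normalized embeddings with a cheap dot product and get the same ordering as cosine similarity.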

Now, create a vector store using Qdrant to store document embeddings.

```python
# create vectorstore
from langchain_community.vectorstores import Qdrant

vectorstore = Qdrant.from_documents(
    documents,
    embeddings,
    url="your_qdrant_url",
    prefer_grpc=True,
    collection_name="documents",
    api_key=os.environ["QDRANT_API_KEY"],
)
```

Then define a retriever from the vector store.

```python
# create retriever
retriever = vectorstore.as_retriever()
```

After that, define the web search component using Tavily.

```python
# define web search
from langchain_community.tools.tavily_search import TavilySearchResults

web_search_tool = TavilySearchResults(max_results=10)
```

Now load the DeepSeek R1 model for generating the response.

```python
# load llm
from langchain_deepseek import ChatDeepSeek

llm = ChatDeepSeek(model="deepseek-reasoner")
```

Then create vector search and web search functions for the tool calls.

```python
# define vector search
from langchain.chains import RetrievalQA

def vector_search(query: str):
    # retrieve matching chunks and let the LLM answer from them
    qa_chain = RetrievalQA.from_chain_type(llm=llm, retriever=retriever)
    return qa_chain.invoke({"query": query})["result"]

# define web search
def web_search(query: str):
    return web_search_tool.run(query)
```
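Conceptually, `vector_search` retrieves the most relevant chunks, packs them into a single prompt, and asks the model to answer from that context. The sketch below mimics this flow with stand-ins so it runs without any API keys. `build_prompt`, `answer_with_retrieval`, `fake_retrieve`, and `echo_llm` are hypothetical names, and the prompt wording is an assumption, not LangChain's actual template.

```python
from typing import Callable, List


def build_prompt(question: str, documents: List[str]) -> str:
    """Pack the retrieved documents and the question into one prompt string."""
    context = "\n\n".join(documents)
    return (
        "Use the following context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer_with_retrieval(
    question: str,
    retrieve: Callable[[str], List[str]],
    llm: Callable[[str], str],
    k: int = 4,
) -> str:
    """Retrieve up to k documents, build the prompt, and call the model."""
    documents = retrieve(question)[:k]
    return llm(build_prompt(question, documents))


# Stand-ins for a real retriever and a real LLM (hypothetical).
def fake_retrieve(question: str) -> List[str]:
    return ["chunk one", "chunk two", "chunk three"]


def echo_llm(prompt: str) -> str:
    return prompt  # returns the prompt so the packed context can be inspected


packed = answer_with_retrieval("What is in the report?", fake_retrieve, echo_llm, k=2)
```

Swapping `fake_retrieve` for a real retriever call and `echo_llm` for a real model call gives the same shape of pipeline that the chain runs internally.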

Next, configure tools the agent can use, such as the vector retriever and web search:

```python
# create tool call for vector search and web search
from langchain.tools import tool

@tool
def vector_search_tool(query: str) -> str:
    """Tool for searching the vector store."""
    return vector_search(query)

@tool
def web_search_tool_func(query: str) -> str:
    """Tool for performing web search."""
    return web_search(query)

# define tools for the agent
from langchain.agents import Tool

tools = [
    Tool(
        name="VectorStoreSearch",
        func=vector_search_tool,
        description="Use this to search the vector store for information."
    ),
    Tool(
        name="WebSearch",
        func=web_search_tool_func,
        description="Use this to perform a web search for information."
    ),
]
```

After that, define a system prompt for the agent.

````python
# define system prompt
system_prompt = """Respond to the human as helpfully and accurately as possible. You have access to the following tools: {tools}
Always try the \"VectorStoreSearch\" tool first. Only use \"WebSearch\" if the vector store does not contain the required information.
Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).
Valid "action" values: "Final Answer" or {tool_names}
Provide only ONE action per $JSON_BLOB, as shown:
```
{{
  "action": $TOOL_NAME,
  "action_input": $INPUT
}}
```
Follow this format:
Question: input question to answer
Thought: consider previous and subsequent steps
Action:
```
$JSON_BLOB
```
Observation: action result
... (repeat Thought/Action/Observation N times)
Thought: I know what to respond
Action:
```
{{
  "action": "Final Answer",
  "action_input": "Final response to human"
}}
Begin! Reminder to ALWAYS respond with a valid json blob of a single action.
Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation"""

# human prompt
human_prompt = """{input}
{agent_scratchpad}
(reminder to always respond in a JSON blob)"""

# create prompt template
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system_prompt),
        ("human", human_prompt),
    ]
)

# tool render: fill in the {tools} and {tool_names} placeholders
from langchain.tools.render import render_text_description_and_args

prompt = prompt.partial(
    tools=render_text_description_and_args(list(tools)),
    tool_names=", ".join([t.name for t in tools]),
)
````

Finally, create the RAG pipeline and the agent.

```python
# create rag chain
from langchain_core.runnables import RunnablePassthrough
from langchain.agents.output_parsers import JSONAgentOutputParser
from langchain.agents.format_scratchpad import format_log_to_str

chain = (
    RunnablePassthrough.assign(
        # turn earlier (action, observation) pairs into scratchpad text
        agent_scratchpad=lambda x: format_log_to_str(x["intermediate_steps"]),
    )
    | prompt
    | llm
    | JSONAgentOutputParser()
)

# create agent
from langchain.agents import AgentExecutor

agent_executor = AgentExecutor(
    agent=chain,
    tools=tools,
    handle_parsing_errors=True,
    verbose=True
)
```

Now test Agentic RAG with a sample query.

```python
agent_executor.invoke({"input": "Total automotive revenues Q3-2024"})
```

Response:

````text
> Entering new AgentExecutor chain...
Thought: I need to find the total automotive revenues for Q3 2024. I should first check my vector store for this information.
Action:
```
{
  "action": "VectorStoreSearch",
  "action_input": "Total automotive revenues Q3-2024"
}
```
Total automotive revenues in Q3-2024 were $20,016 million.
Action:
```
{
  "action": "Final Answer",
  "action_input": "Total automotive revenues in Q3-2024 were $20,016 million."
}
```
> Finished chain.
{'input': 'Total automotive revenues Q3-2024',
 'output': 'Total automotive revenues in Q3-2024 were $20,016 million.'}
````

Then execute multiple queries for the evaluation part.

```python
# create agent with verbose=False for production
agent_output = AgentExecutor(
    agent=chain,
    tools=tools,
    handle_parsing_errors=True,
    verbose=False
)

# Create dataset
question = [
    "What milestones did the Shanghai factory achieve in Q3 2024?",
    "Tesla stock market summary for 2024?"
]
response = []
contexts = []

# Inference
for query in question:
    # collect the context: vector store first, web search as fallback
    vector_contexts = retriever.invoke(query)
    if vector_contexts:
        context_texts = [doc.page_content for doc in vector_contexts]
        contexts.append(context_texts)
    else:
        print(f"[DEBUG] No relevant information in vector store for query: {query}. Falling back to web search.")
        web_results = web_search_tool.run(query)
        contexts.append([web_results])

    # Get the agent response
    result = agent_output.invoke({"input": query})
    response.append(result['output'])

# To dict
data = {
    "query": question,
    "response": response,
    "context": contexts,
}
```

Next, connect this data to Athina IDE to evaluate the performance of our Agentic RAG pipeline.

```python
# Format the data for Athina
rows = []
for i in range(len(data["query"])):
    row = {
        'query': data["query"][i],
        'context': data["context"][i],
        'response': data["response"][i],
    }
    rows.append(row)

# connect to Athina
from athina_client.datasets import Dataset
from athina_client.keys import AthinaApiKey

AthinaApiKey.set_key(os.environ['ATHINA_API_KEY'])

try:
    Dataset.add_rows(
        dataset_id='10a24e8f-3136-4ed0-89cc-a35908897a46',
        rows=rows
    )
except Exception as e:
    print(f"Failed to add rows: {e}")
```

In the Datasets section of Athina IDE, you will find the Create Dataset option in the top right corner. Click on it and select Login via API or SDK to get the `dataset_id` and Athina API key.

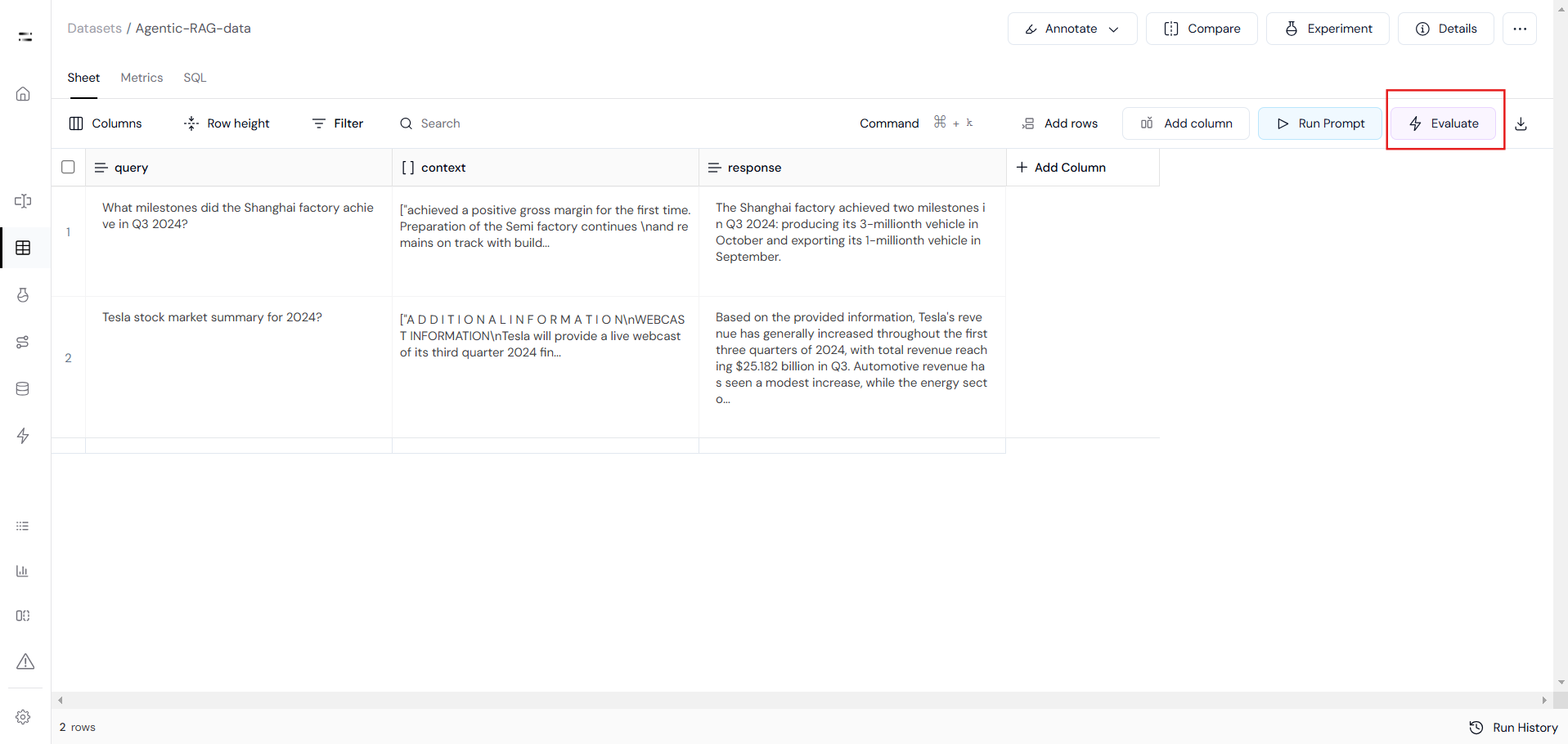

After connecting the data using the Athina SDK, you can access your data at https://app.athina.ai/develop/{{your_data_id}}

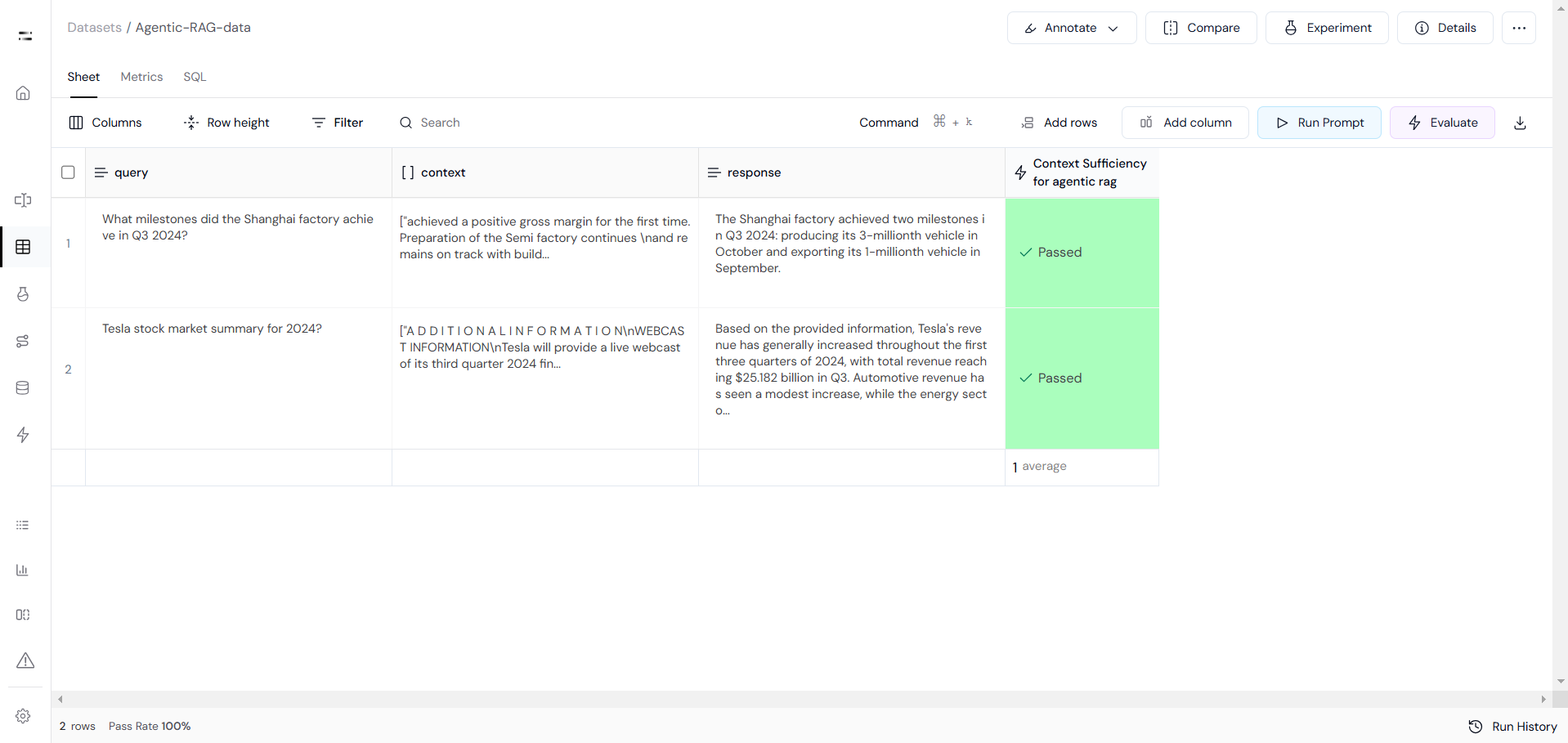

This will look something like Image-1. You can also run various evaluations to test the performance of the Agentic RAG pipeline in the "Evaluate" section located in the top right corner. The evaluation output will look something like Image-2.


Conclusion

Agentic RAG enhances AI-driven retrieval by making it more dynamic, accurate, and adaptable. By integrating AI agents, it improves search strategies, validation, and multi-step reasoning, making responses more reliable.

This approach is ideal for applications requiring real-time knowledge and intelligent decision-making. With tools like LangChain, DeepSeek, and Qdrant, developers can easily implement Agentic RAG to build smarter AI systems.

If you want to explore more advanced and agentic RAG techniques, check out the GitHub Repository.