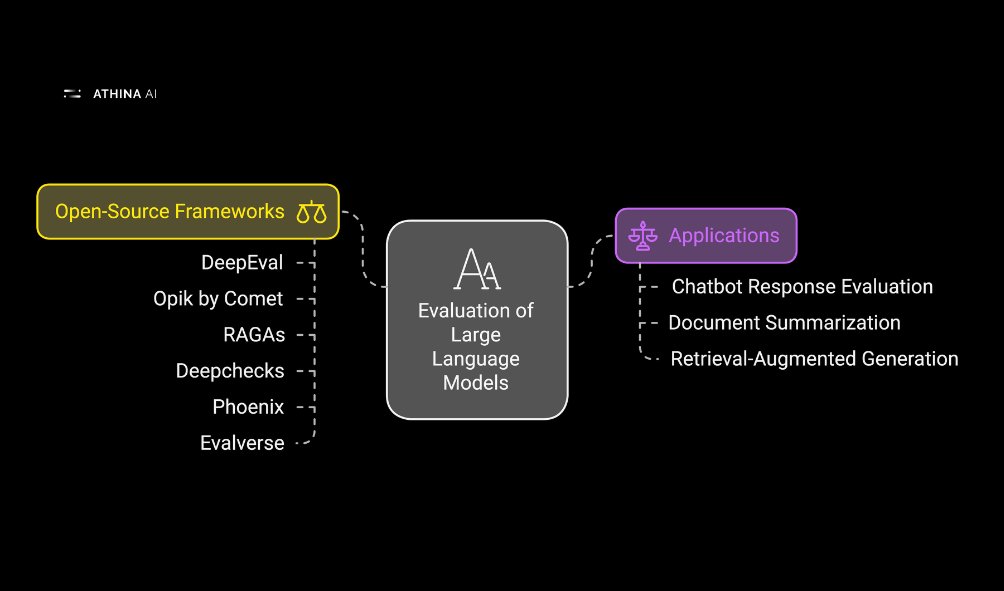

Top 6 Open-Source Frameworks for Evaluating Large Language Models

Evaluating Large Language Models (LLMs) is crucial for ensuring their effectiveness in applications like chatbots, document summarization, and retrieval-augmented generation (RAG). Open-source frameworks simplify this process by providing tools to assess various aspects of LLM performance. In this article, we explore the top 6 open-source LLM evaluation frameworks, each with its own example. Let's dive in.

1. DeepEval

DeepEval provides a suite of over 14 evaluation metrics to assess LLMs, including summarization accuracy and hallucination detection. It integrates seamlessly with Python's Pytest, enabling evaluations to be performed like unit tests.

Key Features:

- Over 14 evaluation metrics.

- Pytest integration.

- Synthetic dataset generation.

Example: Summarization Accuracy Test

from deepeval.metrics import SummarizationMetric

from deepeval.test_case import LLMTestCase

# Define the test case

test_case = LLMTestCase(

input="Summarize the article: Open-source AI tools are rising in popularity...",

actual_output="Open-source AI tools are becoming widely used.",

expected_output="Open-source AI tools are gaining traction."

)

# Evaluate with summarization metric

metric = SummarizationMetric(threshold=0.8)

metric.measure(test_case)

print(f"Summarization score: {metric.score}")
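
The idea of running evaluations like unit tests can be illustrated without any framework. The sketch below is plain Python and does not use DeepEval: `overlap_score` is a toy word-overlap metric, and the 0.5 threshold is an arbitrary value chosen for illustration. Both are assumptions, not part of any library. A real metric would typically rely on an LLM judge rather than word overlap.

```python
def overlap_score(expected: str, actual: str) -> float:
    """Toy metric: fraction of expected words that also appear in the actual output."""
    expected_words = set(expected.lower().split())
    actual_words = set(actual.lower().split())
    if not expected_words:
        return 0.0
    return len(expected_words & actual_words) / len(expected_words)


def test_summary_meets_threshold():
    # Hypothetical threshold, chosen only for this illustration
    threshold = 0.5
    expected = "Open-source AI tools are gaining traction."
    actual = "Open-source AI tools are becoming widely used."
    score = overlap_score(expected, actual)
    # The test fails, like any unit test, when the score drops below the threshold
    assert score >= threshold, f"score {score:.2f} is below threshold {threshold}"
```

Saved in a file such as `test_summary.py` (a hypothetical name), this would be collected and run by `pytest` like any other test function.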

2. Opik by Comet

Opik is an open-source platform by Comet for evaluating, testing, and monitoring Large Language Models (LLMs). It provides flexible tools to track, annotate, and refine LLM applications across development and production environments.

Key Features:

- Log and monitor all LLM calls for debugging and optimization.

- Add feedback and scores to improve evaluation processes.

- Experiment with prompts and models interactively.

- Automate testing with metrics for RAG, hallucination detection, and more.
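
To give a feel for what logging every LLM call involves, here is a minimal sketch in plain Python. It is not Opik's API: `track_call`, `CALL_LOG`, and `fake_llm` are hypothetical names used only for illustration, and a real platform would persist the records rather than keep them in memory.

```python
import functools
import time

# In-memory log of calls; stands in for a real tracing backend
CALL_LOG = []


def track_call(func):
    """Record the inputs, output, and latency of each call to func."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        output = func(*args, **kwargs)
        CALL_LOG.append({
            "name": func.__name__,
            "args": args,
            "kwargs": kwargs,
            "output": output,
            "latency_s": time.perf_counter() - start,
        })
        return output
    return wrapper


@track_call
def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call
    return f"Echo: {prompt}"


fake_llm("What is the capital of France?")
print(CALL_LOG[-1]["name"], CALL_LOG[-1]["output"])
```

Each record can later be annotated with feedback or scores, which is the workflow the features above describe.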

Example: Hallucination Evaluation

from opik.evaluation.metrics import Hallucination

metric = Hallucination()

score = metric.score(

input="What is the capital of France?",

output="Paris",

context=["France is a country in Europe."]

)

print(score)

3. RAGAs

RAGAs focuses on evaluating Retrieval-Augmented Generation pipelines, emphasizing metrics like Faithfulness and Context Precision.

Key Features:

- RAG-specific metrics.

- Detailed error analysis.

Example: Evaluating Context Precision

from ragas import SingleTurnSample, EvaluationDataset, evaluate

from ragas.metrics import LLMContextPrecisionWithReference

# Define inputs and outputs

samples = [

SingleTurnSample(

user_input="Who wrote '1984'?",

retrieved_contexts=["George Orwell wrote '1984'."],

response="George Orwell",

reference="George Orwell"

)

]

# Create an EvaluationDataset from the samples

evaluation_dataset = EvaluationDataset(samples=samples)

# Instantiate the context precision metric (an LLM judge compares each retrieved context with the reference)

context_precision = LLMContextPrecisionWithReference()

# Evaluate context precision

results = evaluate(evaluation_dataset, metrics=[context_precision])

print(f"Context Precision results: {results}")
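
For intuition about what a context precision score measures, the sketch below computes it by hand in plain Python. It assumes the definition given in the RAGAs documentation: the average of precision@k over the ranks that hold a relevant chunk. In the library the relevance flags come from an LLM judge; here they are supplied directly, and the function name is hypothetical.

```python
def context_precision(relevance: list[int]) -> float:
    """relevance[i] is 1 if the retrieved chunk at rank i + 1 is relevant, else 0."""
    hits = 0
    weighted_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            # precision@rank, counted only at ranks that hold a relevant chunk
            weighted_sum += hits / rank
    return weighted_sum / hits if hits else 0.0


# Relevant chunks at ranks 1 and 3, an irrelevant chunk at rank 2
print(context_precision([1, 0, 1]))
```

The score rewards retrievers that place relevant chunks near the top: `[1, 1, 0]` scores higher than `[0, 1, 1]` even though both contain two relevant chunks.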

4. Deepchecks

Deepchecks is a modular framework that supports various LLM evaluation tasks, including dataset bias detection and model performance.

Key Features:

- Bias and fairness detection.

- Supports diverse LLM evaluation tasks.

Example: Bias Detection

import deepchecks_llm as dc_llm

from deepchecks_llm import DeepchecksLLMClient, LogInteractionType, EnvType, ApplicationType

# Initialize the Deepchecks LLM client with your API key

dc_client = DeepchecksLLMClient(api_token='YOUR_API_KEY')

# Create a new application for evaluation

app_name = "BiasDetectionApp"

dc_client.create_application(app_name, ApplicationType.QA)

# Define your input data

inputs = [

{"question": "What is the best programming language?", "answer": "Python"},

{"question": "What language is best for data science?", "answer": "R"}

]

# Simulate model responses (replace this with actual model inference)

model_responses = [

"Python is widely considered the best programming language.",

"R is often chosen for data science tasks."

]

# Log interactions

interactions = [

LogInteractionType(

user_interaction_id=str(idx),

input=item["question"],

output=model_responses[idx],

annotation=item["answer"]

)

for idx, item in enumerate(inputs)

]

# Log the batch of interactions

dc_client.log_batch_interactions(

app_name=app_name,

version_name="v1",

env_type=EnvType.EVAL,

interactions=interactions

)

# Run the bias detection suite

bias_suite = dc_llm.suites.bias_suite()

suite_result = bias_suite.run(app_name=app_name, version_name="v1")

# Display the results

suite_result.show()

5. Phoenix

Phoenix is an open-source AI observability platform that makes it easy to experiment, evaluate, and troubleshoot AI applications. It works seamlessly with frameworks like LangChain, LlamaIndex, and Haystack, and supports LLM providers like OpenAI, Bedrock, and VertexAI.

Key Features:

- Monitor LLM runtime with OpenTelemetry instrumentation.

- Benchmark performance using response and retrieval evaluations.

- Create versioned datasets for experimentation and fine-tuning.

- Track changes to prompts, models, and retrieval processes.

Example: Hallucination Evaluation

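import nest_asyncio

import pandas as pd

from phoenix.evals import HallucinationEvaluator, OpenAIModel, QAEvaluator, run_evals

nest_asyncio.apply() # This is needed for concurrency in notebook environments

# The OpenAI client reads your API key from the OPENAI_API_KEY environment variable

eval_model = OpenAIModel(model="gpt-4o")

# Define your evaluators

hallucination_evaluator = HallucinationEvaluator(eval_model)

qa_evaluator = QAEvaluator(eval_model)

# Illustrative data only: replace with your own queries, responses, and reference texts

df = pd.DataFrame({"query": ["What is the capital of France?"], "response": ["Paris"], "reference": ["Paris is the capital of France."]})

# The evaluators expect "input", "output", "context", and "reference" columns

df["context"] = df["reference"]

df.rename(columns={"query": "input", "response": "output"}, inplace=True)

assert all(column in df.columns for column in ["output", "input", "context", "reference"])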

# Run the evaluators; each evaluator returns a dataframe with its evaluation results

hallucination_eval_df, qa_eval_df = run_evals(

dataframe=df, evaluators=[hallucination_evaluator, qa_evaluator], provide_explanation=True

)

6. Evalverse

Evalverse unifies multiple evaluation frameworks and allows for integration with collaboration tools like Slack for streamlined evaluations.

Key Features:

- Unified framework for multiple evaluation tools.

- Slack integration for no-code evaluations.

Example: Running a Benchmark Evaluation

import evalverse as ev

# Initialize the evaluator

evaluator = ev.Evaluator()

# Specify the model and benchmark

model = "upstage/SOLAR-10.7B-Instruct-v1.0"

benchmark = "h6_en"

# Run the evaluation

evaluator.run(model=model, benchmark=benchmark)

Conclusion

These frameworks simplify the process of evaluating LLMs, each catering to specific needs. By choosing the right framework and integrating it into your workflow, you can ensure your models perform reliably and effectively. Start exploring these tools today and elevate the performance of your AI systems!

While open-source evaluation frameworks provide great features, they might not always meet the needs of organizations that require extensive flexibility for running custom evaluations. That’s where Athina AI comes in. It’s a powerful LLM evaluation platform designed to help enterprises build, test, and monitor AI features, tailored specifically to their unique requirements.