No-Code Data Extraction and Contextual Summarisation: Automating Earnings Reports

We live in a world where data flows in from all directions and technology evolves remarkably fast. To keep pace, it has become important to automate repetitive tasks, especially similarly structured ones that consume valuable time. AI-powered workflows can help streamline these pipelines of repetitive tasks, improving efficiency, accuracy, and decision-making.

This article explores how we can combine API-powered search tools like Tavily and Exa for complex data extraction with LLMs for contextual understanding to create a powerful financial reporting workflow.

But before we dive in, let's discuss the thought process behind this AI workflow and the problem it is meant to solve.

The Problem: Financial Data is Scattered and Volatile

Earnings reports are critical for investors and analysts, yet they are scattered across multiple sources: press releases, regulatory filings, news articles, and analyst reports. The challenge lies in automating data extraction from these sources in real time while ensuring accuracy, relevance, and a structured representation for downstream AI applications.

Traditional web scraping methods often fail due to dynamic content loading, bot prevention mechanisms, and unstructured data formats. This is where an AI pipeline built on web search and extraction tools becomes invaluable.

How It Works: Practical Deep Dive

Now that we understand the problem better, let's break down the flow:

Step 1: Automating Data Retrieval

Platforms like Exa and Tavily are advanced API-powered tools designed for intelligent web search and structured data extraction. They enable developers to:

- Search for up-to-date content across diverse websites.

- Extract key details like facts, figures, and trends.

- Filter and rank results by relevance and confidence scores.

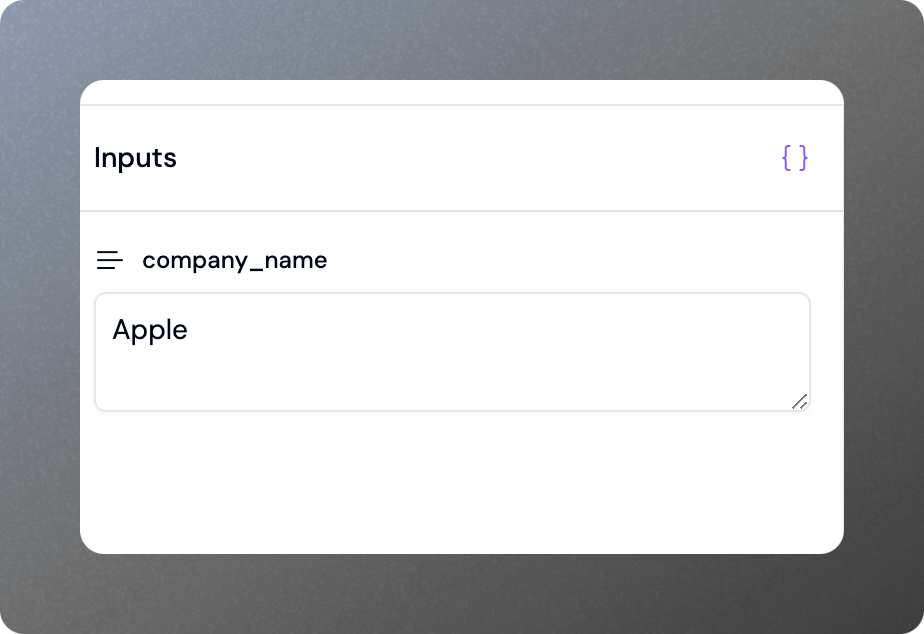

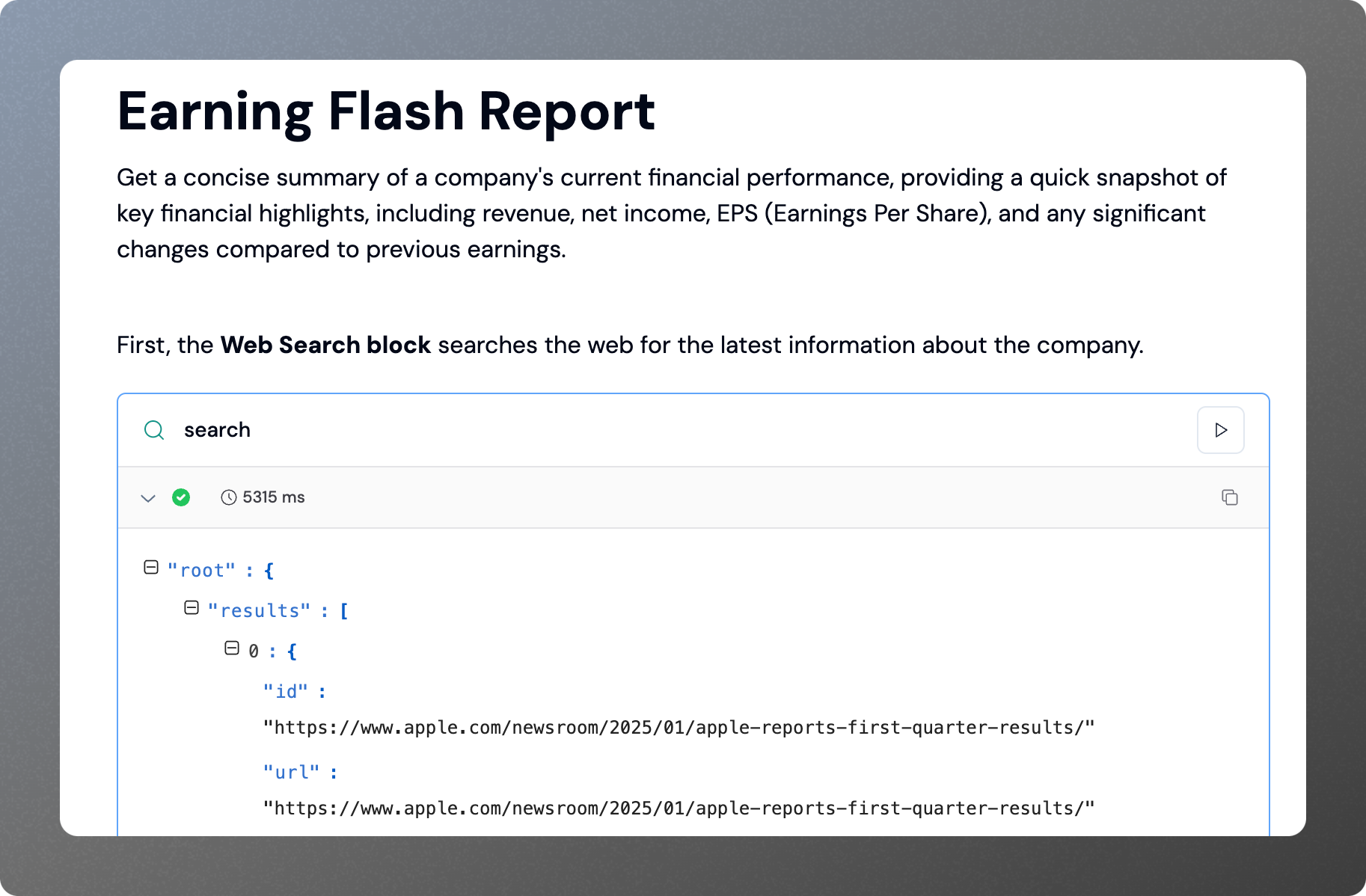

For example, in the screenshots above, an Input Block first prompts the user to enter the name of the company. A Search Block then searches the web using Exa to find relevant results from reliable sources. The JSON output contains:

- Title: “Apple Reports First Quarter Results”

- Summary: “Q1 earnings report shows record revenue of $124.3 billion (4% YoY increase)…”

- Published Date: Timestamp to verify recency

This structured data is then passed to the next processing layer for intelligent summarization.
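Since the flow itself is no-code, none of this needs to be written on the platform. Purely as an illustration, the hand-off from search to summarization could look roughly like the Python sketch below. The `SearchResult` field names, the `score` field, the thresholds, and the fixed reference date are all assumptions made for the example, not the actual Exa or Tavily response schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class SearchResult:
    # Hypothetical field names; real search APIs may name these differently.
    title: str
    summary: str
    published_date: datetime
    score: float  # relevance score, assumed here to lie in [0, 1]


def select_recent_results(results, now, max_age_days=120, min_score=0.5, top_k=3):
    """Keep recent, sufficiently relevant results, highest score first."""
    cutoff = now - timedelta(days=max_age_days)
    fresh = [
        r for r in results
        if r.published_date >= cutoff and r.score >= min_score
    ]
    return sorted(fresh, key=lambda r: r.score, reverse=True)[:top_k]


# Arbitrary fixed reference date so the example is reproducible.
now = datetime(2025, 3, 1, tzinfo=timezone.utc)

results = [
    SearchResult(
        "Apple Reports First Quarter Results",
        "Q1 earnings report shows record revenue of $124.3 billion (4% YoY increase)…",
        now - timedelta(days=30),  # illustrative publication date
        0.92,
    ),
    # The two entries below are made-up placeholders used to show the filtering.
    SearchResult("Old earnings recap", "placeholder", now - timedelta(days=400), 0.95),
    SearchResult("Loosely related news item", "placeholder", now - timedelta(days=5), 0.20),
]

selected = select_recent_results(results, now)
for r in selected:
    print(r.published_date.date(), r.title)
```

Here the stale result is dropped by the recency check on the published date and the loosely related one by the score threshold, so only the earnings release moves on to the summarization step.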

Step 2: Contextual Summarization Using LLMs

Once the financial data is extracted, it needs to be summarized in a human-readable format. LLMs like GPT-4o are well suited to:

- Understanding financial terminology and structuring data into readable insights.

- Identifying significant changes in earnings reports and contextualizing them.

- Generating concise yet insightful summaries that are directly usable by financial analysts and investors.

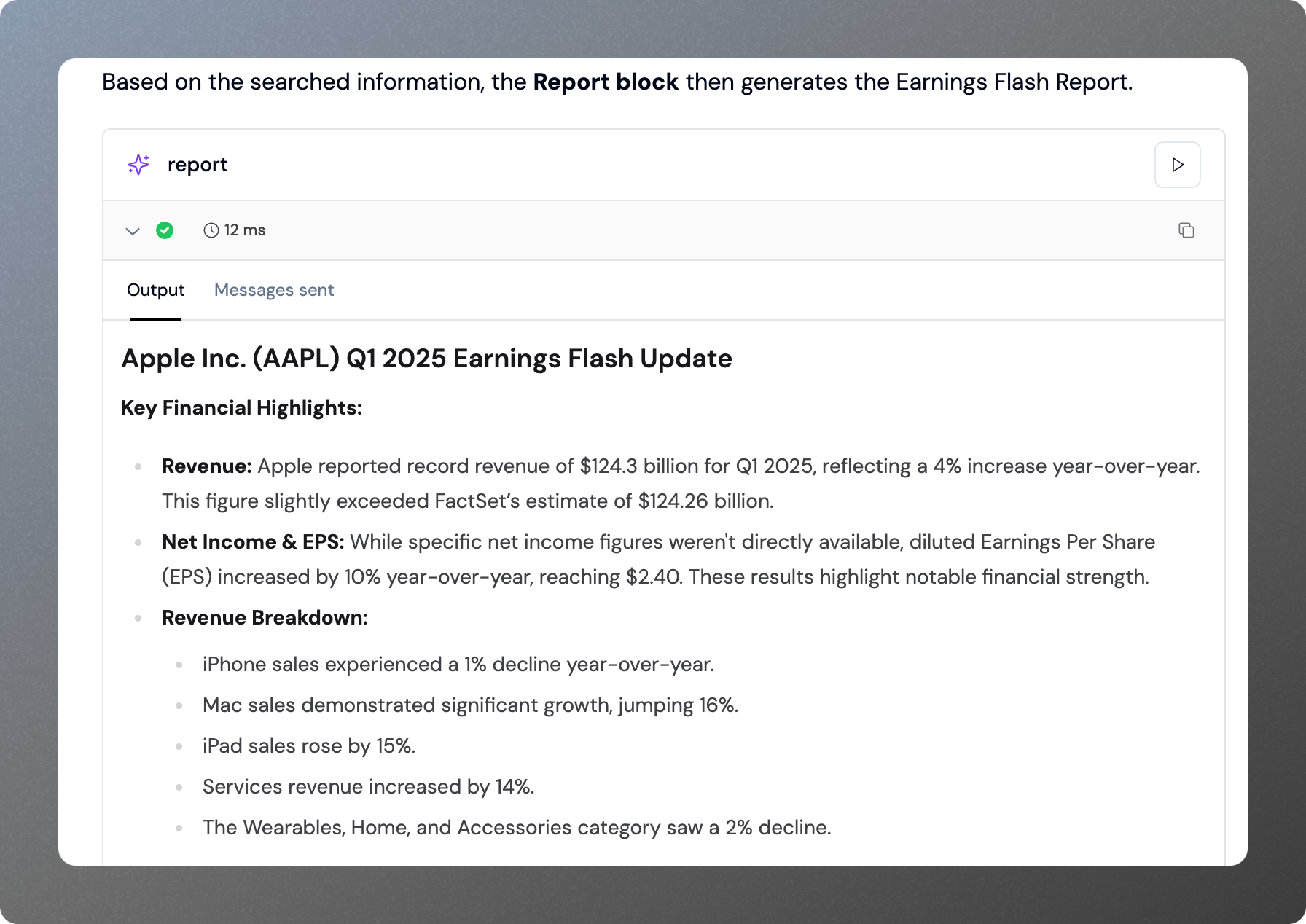

In the workflow, an LLM-based Report block takes the structured output of the search step and generates an “Earnings Flash Report.” The AI model formats:

- Key Financial Highlights: Revenue, Net Income, EPS

- Significant Changes: Trends, comparisons with previous quarters

- Upcoming Events: Shareholder meetings, product launches

This approach helps stakeholders receive timely, digestible financial summaries without manually parsing lengthy reports.
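To make the Report block's job concrete, here is a minimal sketch of how the structured search output could be turned into a prompt that asks for the three report sections. The function name `build_flash_report_prompt`, the dictionary keys, and the prompt wording are hypothetical; the prompt the platform actually uses is not shown in this article, and the call to the LLM itself is left out.

```python
# Section names and hints taken from the report layout described above.
REPORT_SECTIONS = [
    ("Key Financial Highlights", "Revenue, Net Income, EPS"),
    ("Significant Changes", "Trends, comparisons with previous quarters"),
    ("Upcoming Events", "Shareholder meetings, product launches"),
]


def build_flash_report_prompt(company, results):
    """Assemble an LLM prompt from search results.

    `results` is a list of dicts with the (assumed) keys
    'title', 'summary', and 'published_date'.
    """
    sources = "\n".join(
        f"- {r['title']} ({r['published_date']}): {r['summary']}" for r in results
    )
    sections = "\n".join(
        f"{i}. {name}: {hint}"
        for i, (name, hint) in enumerate(REPORT_SECTIONS, start=1)
    )
    return (
        f"Write an Earnings Flash Report for {company}.\n"
        "Use only the sources below; write 'not reported' for any figure "
        "they do not contain.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Structure the report with these sections:\n{sections}\n"
    )


prompt = build_flash_report_prompt(
    "Apple",
    [
        {
            "title": "Apple Reports First Quarter Results",
            "summary": "Q1 earnings report shows record revenue of $124.3 billion (4% YoY increase)…",
            "published_date": "<published date>",  # placeholder, not a real timestamp
        }
    ],
)
print(prompt)
```

Restricting the model to the supplied sources and asking for an explicit 'not reported' is one way to reduce the risk of invented figures, which matters more in financial summaries than in most other summarization tasks.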

Try out the Flow here

Note: The methodology used in this flow can be extended beyond earnings reports to market sentiment analysis, regulatory filings, and stock predictions, as well as to other domains.

Why Athina Flows?

Everyone’s jumping on AI workflows to tackle redundant tasks, especially professionals overwhelmed with data. That’s where a no-code platform like Athina Flows shines, letting anyone build advanced AI pipelines without writing code. Here’s why it’s a good tool for your AI workflows:

- Versatile Pipelines – Automate web scraping, data extraction, translation, summarization, and image generation.

- No-Code Simplicity – Drag-and-drop interface to connect tools like Tavily Web Search, Slack, and APIs.

- LLM Integration – Use any open- or closed-source AI model for real-time processing.

- For All Skill Levels – Analysts can automate manual work; power users can add custom logic.

- Seamless Deployment – One-click execution with enterprise integrations and self-hosting options.

Final Thoughts

With Athina Flows, anyone can build powerful no-code AI workflows from basic components and custom blocks. In this case, we built a pipeline that combines web data extraction with LLM-based contextual analysis to deliver real-time insights.

Want to build your own no-code AI Workflows like these? Try Athina Flows and start integrating real-time knowledge from webpages into your AI projects today!