Top 5 Open Source Libraries to structure LLM Outputs

Large Language Models (LLMs) are revolutionizing industries with their ability to answer questions, generate content, and write code. But they often struggle with producing consistent, structured outputs. Ask for JSON, and you might get a mix of text and JSON—sometimes spot on, sometimes a mess. This makes them hard to use directly in production. In this blog, we’ll explore five open-source libraries to make LLM outputs reliable and ready for real-world applications.

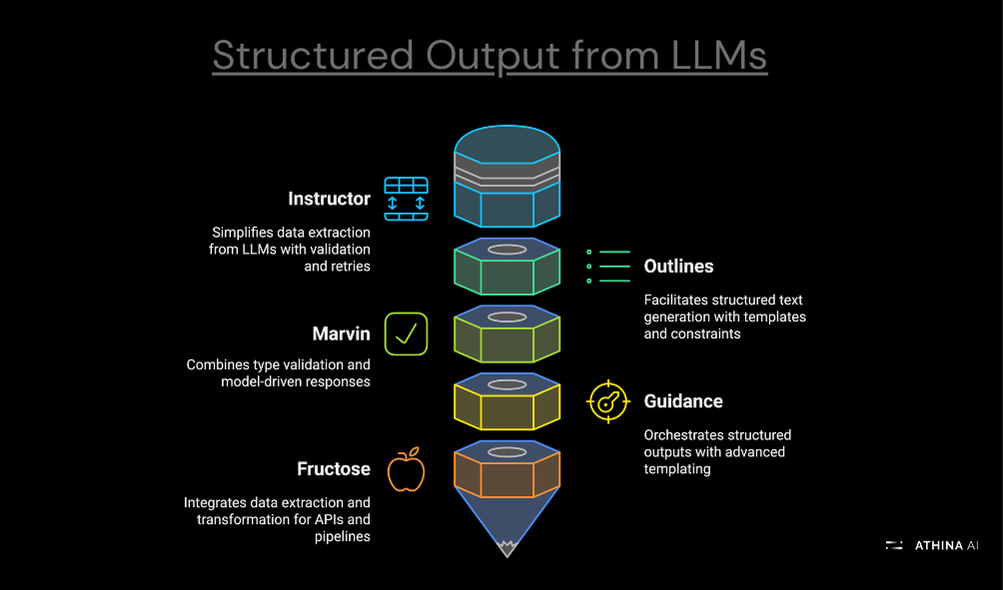

1. Instructor

Instructor is a Python library designed to simplify the extraction of structured data from LLMs. Built on top of Pydantic, it provides a user-friendly API to manage validation, retries, and streaming responses.

Key Features:

- Define response models using Pydantic.

- Automatic validation of LLM outputs against the defined models.

- Seamless integration with various LLM providers.

Example Usage:

import instructor

from pydantic import BaseModel

from openai import OpenAI

# Define the desired output structure

class UserInfo(BaseModel):

name: str

age: int

# Initialize the OpenAI client

openai_client = OpenAI(api_key="your-api-key")

# Patch the OpenAI client with Instructor

client = instructor.from_openai(openai_client)

# Query the LLM

response = client.chat.completions.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": "Provide user information in JSON format."}],

response_model=UserInfo

)

print(response)

In this example, the UserInfo Pydantic model defines the expected structure of the LLM's output. The response_model parameter ensures that the output conforms to this structure, providing automatic validation.

2. Outlines

Outlines is a Python library that facilitates structured text generation by allowing developers to define constraints and templates for LLM outputs. It supports multiple model integrations and offers advanced prompting features.

Key Features:

- Powerful prompting primitives based on the Jinja templating engine.

- Support for regex-structured generation and JSON schemas.

- Compatibility with various LLM backends, including OpenAI and Hugging Face Transformers.

Example Usage:

from outlines import prompt

# Define a prompt template

@prompt

def user_profile():

"""

Generate a user profile in JSON format with the following fields:

- name: string

- age: integer

- email: string

"""

pass

# Generate the structured output

response = user_profile()

print(response)

Here, the user_profile function uses a docstring to define the desired output structure. When called, it prompts the LLM to generate a user profile adhering to the specified format.

3. Marvin

Marvin is a library that combines type validation and model-driven responses to structure LLM outputs. It leverages Pydantic for defining and validating output schemas, ensuring that the generated data meets the specified requirements.

Key Features:

- Integration with Pydantic for schema validation.

- Extensible design for creating reusable workflows.

- Error handling for invalid or incomplete LLM outputs.

Example Usage:

from marvin import AI, schema

# Define a Pydantic schema

class UserProfile(schema.BaseModel):

name: str

age: int

email: str

# Initialize the AI model

ai = AI()

# Generate and validate the output

response = ai.generate(

prompt="Create a user profile in JSON format with name, age, and email.",

model=UserProfile

)

print(response)

In this snippet, the UserProfile schema defines the expected structure of the LLM's output. The generate method ensures that the output conforms to this schema, providing validation and error handling.

4. Guidance

Guidance is a library designed to orchestrate structured outputs by leveraging advanced prompt templating and response validation. It allows developers to define complex workflows and ensures that LLM outputs adhere to specified formats.

Key Features:

- Advanced templating for crafting precise LLM instructions.

- In-built support for JSON and other structured formats.

- Modular components for custom workflows.

Example Usage:

import guidance

import json

# Initialize the language model (replace 'your_model' with the actual model you're using)

llm = guidance.llms.OpenAI("gpt-3.5-turbo") # Example for OpenAI's GPT-3.5

# Define the JSON schema for the user profile

user_profile_schema = {

"type": "object",

"properties": {

"name": {"type": "string"},

"age": {"type": "integer"},

"email": {"type": "string", "format": "email"}

},

"required": ["name", "age", "email"]

}

# Define the guidance program with the JSON schema

program = guidance.Program(

llm=llm,

schema=user_profile_schema,

prompt="""

Generate a user profile in JSON format with the following fields:

- name: string

- age: integer

- email: string

"""

)

# Execute the program

response = program()

print(json.dumps(response, indent=2))

This example shows how to use the Guidance library to generate a user profile in JSON format, ensuring strict adherence to a defined schema. The Program class handles structured prompts and output management for consistent, reliable results.

5. Fructose

Fructose is a library that simplifies the process of structuring LLM outputs by integrating seamless data extraction and transformation capabilities. It is particularly effective for use cases like API responses and data pipelines.

Key Features:

- Easy-to-use interface for structured data extraction.

- Pre-built workflows for common formatting tasks.

- Flexible integration with other AI pipelines.

Example Usage:

from fructose import Fructose

# Initialize the Fructose client

ai = Fructose()

# Define a function with type annotations

@ai

def get_user_profile(name: str) -> dict:

"""

Generate a user profile in JSON format with the following fields:

- name: string

- age: integer

- email: string

"""

...

# Call the function

profile = get_user_profile("Alice")

print(profile)

Conclusion

It's all about finding the right balance. Depending on the complexity of your use case, you might find that one tool perfectly fits your needs, or you might need to combine multiple approaches to achieve the desired results. Here's a quick recap of the open-source libraries we explored in this blog:

- Instructor simplifies the process of guiding LLMs to generate structured outputs with built-in validation, making it great for straightforward use cases.

- Outlines excels at creating reusable workflows and leveraging advanced prompting for consistent, structured outputs.

- Marvin provides robust schema validation using Pydantic, ensuring data reliability, but it relies on clean inputs from the LLM.

- Guidance offers advanced templating and workflow orchestration, making it ideal for complex tasks requiring high precision.

- Fructose is perfect for seamless data extraction and transformation, particularly in API responses and data pipelines.

When building production-grade systems, combining these tools often yields the best results. Start with a clear and precise prompt, use schema validation for consistency, and employ tools like Guidance or Fructose to handle more complex scenarios. By leveraging these libraries, you can transform unstructured LLM outputs into reliable, structured data ready for your applications.